
Tutorial:


1: Efficiency

(I continuously update and correct this page.)

Have you ever thought about how efficiently you collect and detect photons? Each lens surface transmits about 99% of the light and each mirror surface reflects about 92%; the real figures can be somewhat worse or better. A telescope is a whole system of optical parts, though, and together they add up to significant losses.

There are also limits in the sensor's efficiency, the quantum efficiency (QE). You also lose time between every sub-image you take. I have collected these losses in a table so you can see the scary result.


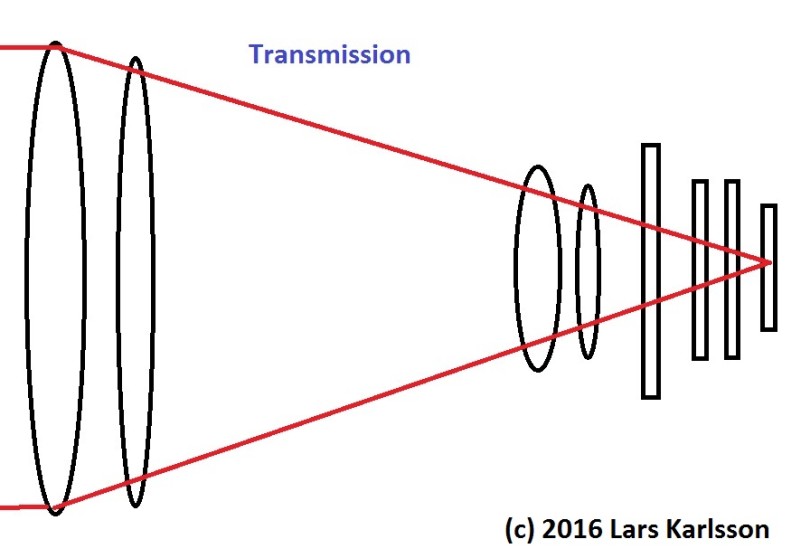

2: Transmission efficiency

This compares two kinds of telescope, assuming the same aperture and focal length. Each is set up with a high-quality corrector to make an astrograph of it, optimized for wide-field deep sky. It is only a relative comparison. Also assume a monochrome camera with a filter wheel for making RGB color images. Note: each lens or filter has two surfaces. Each line of the table is that part's contribution to the efficiency.

It doesn't look too bad: the throughput is about 82% of the photons in the refractor and 62% in the reflector. But it is a bit worse than that. These figures are for the center of the sensor, and vignetting may cut them by as much as half at the corners. But is this the whole story?
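The arithmetic behind a throughput figure like this can be sketched in a few lines of Python. This is only a sketch: the 99% and 92% values are the per-surface figures quoted in section 1, while the function name `throughput` and the surface counts in the example are hypothetical and are not taken from the table.

```python
def throughput(lens_surfaces, mirror_surfaces,
               lens_transmission=0.99, mirror_reflectivity=0.92):
    """Fraction of light left after all optical surfaces.

    Every surface multiplies the remaining light by its own efficiency,
    so the losses compound instead of simply adding up.
    """
    return (lens_transmission ** lens_surfaces) * (mirror_reflectivity ** mirror_surfaces)


# Hypothetical surface counts, for illustration only.
example_refractor = throughput(lens_surfaces=12, mirror_surfaces=0)
example_reflector = throughput(lens_surfaces=8, mirror_surfaces=2)

print(f"example refractor: {example_refractor:.1%}")
print(f"example reflector: {example_reflector:.1%}")
```

Because the factors multiply, two mirror surfaces at 92% cost more light than a dozen lens surfaces at 99%.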


3: QE, Filter loss, Time efficiency

No. We also have the camera's efficiency, the QE. Then we have the RGB filters: each transmits about 33% of the visual wavelength range (which is the whole idea behind making color images), and with today's cameras you can only capture one color per exposure. Finally there is the time efficiency: between every image there is a delay, maybe 10% of the exposure time.

Look, just 12% or even less for the reflector!
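How the separate losses combine can be sketched like this. The optical throughput (82% and 62%), the 33% filter fraction and the 10% delay are the figures from the text. The QE of 50% is my assumption, since the table itself is only available as an image, and the 300 second exposure is a hypothetical value.

```python
def total_efficiency(optics, qe, filter_fraction, exposure_s, delay_s):
    """Fraction of incoming photons that end up detected."""
    # Share of the clock time during which the shutter is actually open.
    time_efficiency = exposure_s / (exposure_s + delay_s)
    return optics * qe * filter_fraction * time_efficiency


ASSUMED_QE = 0.5    # assumption, not a figure from the text
EXPOSURE_S = 300.0  # hypothetical sub-exposure length
DELAY_S = 30.0      # 10% of the exposure time, as in the text

refractor = total_efficiency(0.82, ASSUMED_QE, 0.33, EXPOSURE_S, DELAY_S)
reflector = total_efficiency(0.62, ASSUMED_QE, 0.33, EXPOSURE_S, DELAY_S)

print(f"refractor: {refractor:.1%}")
print(f"reflector: {reflector:.1%}")
```

With these assumptions the refractor comes out at roughly 12% and the reflector at roughly 9%.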


4: Conclusion

With 100% efficiency we could detect about 10 times more photons in the same hours we spend on an evening of astrophotography. Does it have to be like this? A camera like the Foveon, which captures all three colors in parallel, would make a huge difference, but as far as I know these cameras have a lot of other disadvantages that make them unusable for astrophotography; maybe in the future. We just have to wait for better technology. By the way, single-shot color DSLR cameras are no better: they use only every fourth pixel for red, every fourth pixel for blue, and every second pixel for green.
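The pixel shares of a single-shot color sensor follow directly from the repeating 2x2 filter tile. A minimal sketch, assuming the common RGGB Bayer layout (the text does not name a specific pattern, and `color_fractions` is just an illustrative helper):

```python
from collections import Counter

# One repeating tile of an RGGB Bayer filter (assumed layout).
BAYER_TILE = (("R", "G"),
              ("G", "B"))


def color_fractions(tile=BAYER_TILE):
    """Share of the sensor's pixels that sits behind each filter color."""
    counts = Counter(color for row in tile for color in row)
    total = sum(counts.values())
    return {color: n / total for color, n in counts.items()}


fractions = color_fractions()
print(fractions)
```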

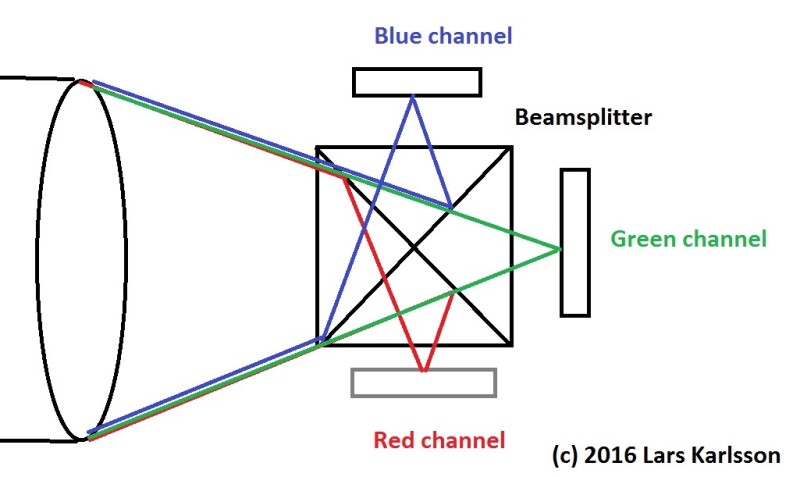

Another way to solve it is a beam splitter, as older video cameras had. You then need three sensors, which is a bit expensive but gives three times more photons per unit of time (if the beam splitter is 100% efficient). Another advantage is that each color channel can have its own focus. But as usual when something looks very good, there are problems: it needs a very long back focus, and the beam splitter isn't easy to manufacture.

Narrowband imaging is a little different. Doing it in parallel with high wavelength resolution would take something like a two-dimensional spectrograph that delivers data cubes (three-dimensional data). RGB color images do the same thing, but with just three planes of data. I don't see any technology in the near future that will make this more efficient.

Could the QE of cameras be better? Yes, it increases with almost every new camera or sensor that is developed.

Note: if the telescope can handle a large image circle, a full-frame sensor collects about twice as many photons as an APS-C sensor, since it has about twice the surface area. That means less need for mosaics, which saves time.
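The sensor area comparison is easy to check. The dimensions below are nominal values that I am assuming here: 36 x 24 mm for full frame and roughly 23.6 x 15.7 mm for APS-C, which differs a little between manufacturers.

```python
# Nominal sensor dimensions in millimetres (assumed, vary by manufacturer).
FULL_FRAME_MM = (36.0, 24.0)
APS_C_MM = (23.6, 15.7)


def sensor_area(size_mm):
    """Sensor surface in square millimetres."""
    width, height = size_mm
    return width * height


area_ratio = sensor_area(FULL_FRAME_MM) / sensor_area(APS_C_MM)
print(f"full frame / APS-C area: {area_ratio:.2f}")
```

With these dimensions the ratio lands a little above two, and it only translates into more collected photons if the telescope illuminates the whole larger sensor.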
